In itself, Airflow is a general-purpose orchestration framework with a manageable set of features to learn. Hevo Data, a No-code Data Pipeline, helps load data from any data source, such as Databases, SaaS applications, Cloud Storage, SDKs, and Streaming Services, and simplifies the ETL process. It supports 100+ data sources (including 30+ free data sources), and setup is a 3-step process: select the data source, provide valid credentials, and choose the destination.

Elegant: Airflow pipelines are explicit and straightforward.

Moreover, it enables users to resume from the point of failure without re-running the entire workflow.
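The resume-from-failure idea can be pictured with a small toy runner that remembers which steps already succeeded and skips them on the next run. This is a hypothetical, stdlib-only sketch: `run_pipeline`, the `state` dict, and the example steps are assumptions for illustration, not Airflow code. Airflow itself records task-instance state in its metadata database and lets you clear and re-run only the failed tasks.

```python
from typing import Callable


def run_pipeline(steps: list[tuple[str, Callable[[], None]]], state: dict[str, str]) -> list[str]:
    """Run steps in order, skipping any step already marked "success" in `state`.

    Stops at the first failure and marks that step "failed", so the next
    call resumes from it instead of re-running the whole pipeline.
    Returns the names of the steps attempted in this call.
    """
    attempted = []
    for name, fn in steps:
        if state.get(name) == "success":
            continue  # finished in an earlier run; do not repeat it
        attempted.append(name)
        try:
            fn()
        except Exception:
            state[name] = "failed"
            break  # downstream steps are not attempted after a failure
        state[name] = "success"
    return attempted


# Usage: "transform" fails once with a transient error, then succeeds.
calls = {"transform": 0}


def extract() -> None:
    pass


def transform() -> None:
    calls["transform"] += 1
    if calls["transform"] == 1:
        raise RuntimeError("transient error")


def load() -> None:
    pass


steps = [("extract", extract), ("transform", transform), ("load", load)]
state: dict[str, str] = {}
first = run_pipeline(steps, state)
second = run_pipeline(steps, state)
print(first)   # ['extract', 'transform']
print(second)  # ['transform', 'load']
```

On the second call, "extract" is skipped because it already succeeded; only the failed step and the steps after it run.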

Introduction to Apache Airflow

Apache Airflow is an open-source, batch-oriented, pipeline-building framework for developing and monitoring data workflows. Airbnb developed Airflow in 2014 to solve big data and complex Data Pipeline problems. Workflows are written and scheduled as Python code, and a built-in web interface lets users monitor workflow execution. The increasing success of the Airflow project led to its adoption by the Apache Software Foundation. Airflow enables users to efficiently build scheduled Data Pipelines using standard Python features, such as the datetime format for scheduling tasks.
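Because scheduling builds on Python's `datetime`, the dates a fixed schedule produces can be reasoned about with the standard library alone. The sketch below is a simplified, hypothetical model: the `data_intervals` helper is not part of Airflow. Given a `start_date` and a `timedelta` schedule, it lists the consecutive intervals that have fully elapsed by a cutoff. Depending on the Airflow version and timetable, a run may fire at the start or at the end of its interval, so treat this only as a picture of how the dates line up.

```python
from datetime import datetime, timedelta


def data_intervals(start_date: datetime, every: timedelta, until: datetime) -> list[tuple[datetime, datetime]]:
    """Return consecutive [start, end) intervals of length `every` that end on or before `until`."""
    intervals = []
    start = start_date
    while start + every <= until:
        intervals.append((start, start + every))
        start += every
    return intervals


# Usage: a daily schedule starting on 1 January, checked at midnight on 4 January.
runs = data_intervals(datetime(2024, 1, 1), timedelta(days=1), datetime(2024, 1, 4))
for begin, end in runs:
    print(begin.date(), "->", end.date())
# 2024-01-01 -> 2024-01-02
# 2024-01-02 -> 2024-01-03
# 2024-01-03 -> 2024-01-04
```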

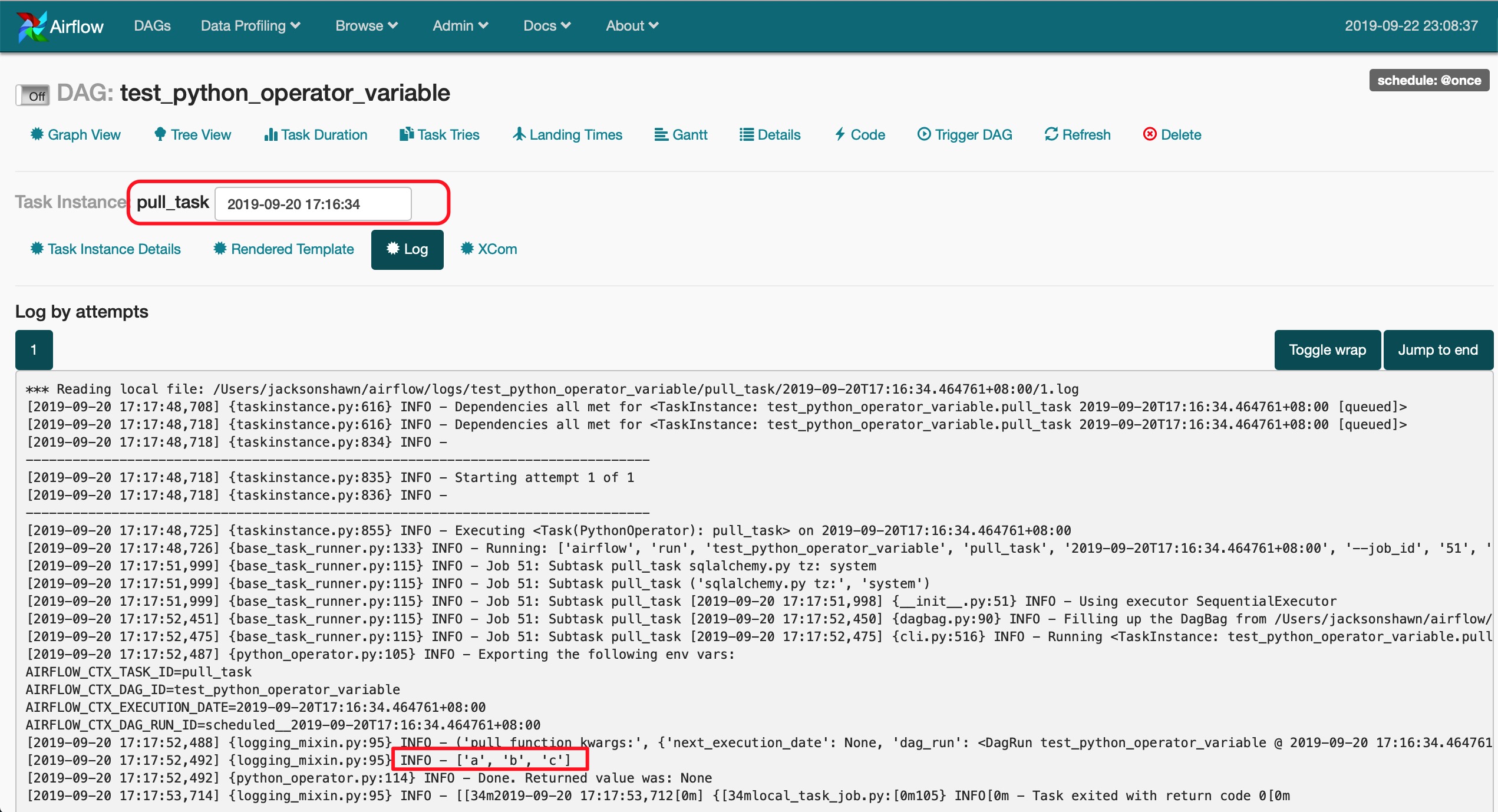

As a result, Airflow is currently used by many data-driven organizations to orchestrate a variety of crucial data activities. This article will guide you through installing Apache Airflow in a Python environment and explain the different Python Operators used in Airflow. It covers:

- Simplify Data Analysis with Hevo’s No-code Data Pipeline
- Getting started with Airflow in Python Environment
- Introducing Python Operators in Apache Airflow
- 6) Python Operator: _operator.DataFlowPythonOperator
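At its core, a Python operator wraps an ordinary Python callable as a named task, and the DAG fixes the order in which tasks run. The stdlib-only sketch below models just that idea; `ToyPythonTask` and `run_in_order` are hypothetical names, not Airflow classes. In real Airflow code you would instead pass a `task_id` and a `python_callable` to `PythonOperator` and declare dependencies with the `>>` operator.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter
from typing import Any, Callable


@dataclass
class ToyPythonTask:
    """Hypothetical stand-in for an operator: a task id plus a Python callable."""
    task_id: str
    python_callable: Callable[[], Any]
    upstream: list[str] = field(default_factory=list)  # ids of tasks that must run first


def run_in_order(tasks: list[ToyPythonTask]) -> dict[str, Any]:
    """Call each task's callable only after all of its upstream tasks have run."""
    by_id = {t.task_id: t for t in tasks}
    # TopologicalSorter expects a mapping of node -> its predecessors.
    graph = {t.task_id: set(t.upstream) for t in tasks}
    results: dict[str, Any] = {}
    for task_id in TopologicalSorter(graph).static_order():
        results[task_id] = by_id[task_id].python_callable()
    return results


# Usage: tasks are listed out of order; the dependencies fix the run order.
order: list[str] = []


def record(name: str) -> Callable[[], str]:
    def fn() -> str:
        order.append(name)
        return name.upper()
    return fn


tasks = [
    ToyPythonTask("load", record("load"), upstream=["transform"]),
    ToyPythonTask("extract", record("extract")),
    ToyPythonTask("transform", record("transform"), upstream=["extract"]),
]
results = run_in_order(tasks)
print(order)  # ['extract', 'transform', 'load']
```

A cycle in `upstream` would make `static_order()` raise `graphlib.CycleError`, which mirrors the rule that a DAG must be acyclic.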
